A brief description of the concept that includes what it does, who it is for, and where it lives (not more than a couple of sentences)

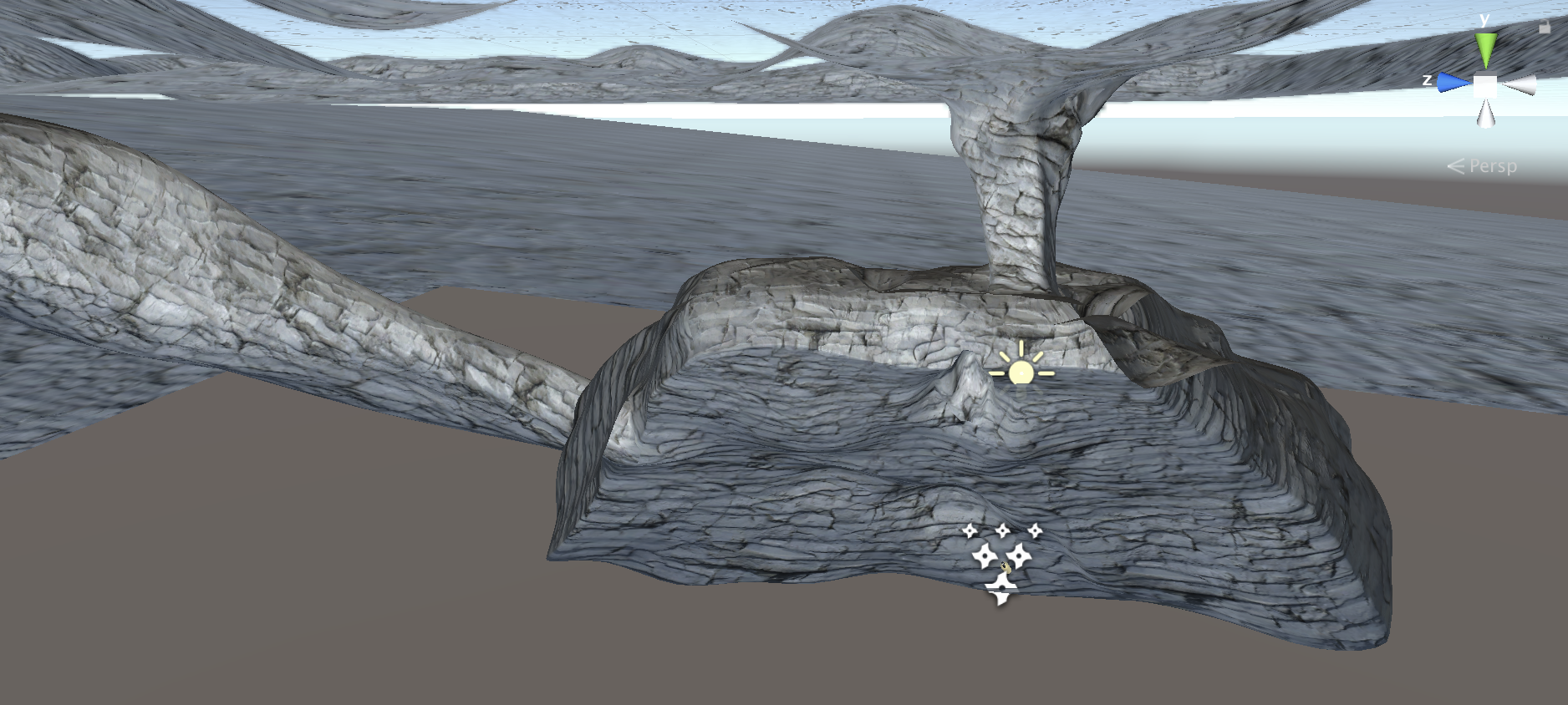

A drawn sketch (or sketches) that indicates form, materials, scale, and interaction

The song you started from

The oblique strategy you got

“My Boy” by Billie Eilish

Basic catalog of attributes:

Key: B minor

bpm: 90

drums, female vocals (breathiness), “ghostly sound”, synth, harmony
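The key and tempo in this catalog can be turned into numbers an installation might use, for example to tune generated harmonies or to time visuals to the beat. Below is a minimal sketch that assumes twelve-tone equal temperament with A4 = 440 Hz and the natural minor scale. It says nothing about the song's actual chord voicings. At 90 bpm each beat lasts 60 / 90 ≈ 0.67 seconds, and B natural minor contains B, C#, D, E, F#, G, and A. The function names are hypothetical.

```python
# Sketch: derive scale frequencies and beat length from the catalogued key and tempo.
# Assumes 12-tone equal temperament (A4 = 440 Hz) and the natural minor scale.

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
NATURAL_MINOR_STEPS = [0, 2, 3, 5, 7, 8, 10]  # semitones above the tonic


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency of a MIDI note number (69 = A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def minor_scale(tonic: int) -> list[tuple[str, float]]:
    """(name, frequency in Hz) for each degree of the natural minor scale on `tonic`."""
    return [
        (NOTE_NAMES[(tonic + step) % 12], round(midi_to_hz(tonic + step), 2))
        for step in NATURAL_MINOR_STEPS
    ]


def beat_seconds(bpm: float) -> float:
    """Duration of one beat in seconds."""
    return 60.0 / bpm


B3 = 59  # MIDI note number of the B just below middle C
scale = minor_scale(B3)
beat = beat_seconds(90)

for name, hz in scale:
    print(f"{name}: {hz} Hz")
print(f"one beat at 90 bpm = {beat:.3f} s")
```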

Random oblique strategy: “Make something implied more definite (reinforce, duplicate)”

Kemi and I imagined that we work for Billie Eilish and her team. We designed an installation with a supplementary mobile application. The installation features a large touch screen divided into squares, each highlighting a different aspect of Eilish’s music, so that the elements implied in her sound become more definite. Touching the center plays the original sound, and moving outward toward the corners breaks it down into the components that create that sound.
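One way the center-to-corner interaction could work is sketched below. The sketch assumes, hypothetically, that the track is available as four separate stems that sum back to the original mix. It assigns the vocals, drums, synth, and harmony from the attribute catalog to the four corners. That assignment and the function name `stem_gains` are illustrative only and are not part of our design. The touch position sets how far the sound is "broken down." At the center every stem plays at full gain. At a corner, only that corner's stem remains.

```python
import math

# Hypothetical corner assignment in normalised screen coordinates (y = 0 is the top).
# Assumes the song exists as four stems that sum back to the original mix.
CORNER_STEMS = {
    (0.0, 0.0): "vocals",   # top-left
    (1.0, 0.0): "drums",    # top-right
    (0.0, 1.0): "synth",    # bottom-left
    (1.0, 1.0): "harmony",  # bottom-right
}

MAX_RADIUS = math.hypot(0.5, 0.5)  # distance from the centre to any corner


def stem_gains(x: float, y: float) -> dict[str, float]:
    """Map a touch at normalised (x, y) in [0, 1]^2 to a gain for each stem.

    Centre -> every stem at full gain (the original mix).
    Corner -> only that corner's stem is audible.
    """
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    # 0 at the centre, 1 at a corner: how far the sound has been broken down.
    spread = math.hypot(x - 0.5, y - 0.5) / MAX_RADIUS
    # Bilinear weight of each corner; the four weights always sum to 1.
    weights = {
        stem: (1 - abs(cx - x)) * (1 - abs(cy - y))
        for (cx, cy), stem in CORNER_STEMS.items()
    }
    # Rescale so the nearest corner's stem stays at full gain.
    top = max(weights.values())
    return {stem: (1 - spread) + spread * (w / top) for stem, w in weights.items()}


print(stem_gains(0.5, 0.5))  # centre: original mix
print(stem_gains(0.0, 0.0))  # top-left corner: vocals only
print(stem_gains(0.5, 0.0))  # top edge: vocals and drums full, others partly faded
```

This mapping is continuous. Touching the middle of the top edge, for instance, keeps the two top-corner stems at full gain while the bottom two drop to about 0.29. A version that uses discrete squares could quantize `(x, y)` to the nearest square's center before calling the function.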

We also discussed letting users input their own sounds, by speaking or singing, and having our device modify them, almost like a filter, so that they sound like these parts of her music.

2. Write a project prompt for yourself. You will use it to frame subsequent assignments, but it can evolve/change later. Submit it here.

Build a tool that allows one person to sound like many: I am thinking of a device that lets the user sing into it and hear the output harmonize with their voice, kind of like a one-person a cappella group.
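The harmony part of such a tool can be sketched as a pitch calculation. The sketch assumes that a pitch detector has already estimated the sung frequency. Neither pitch detection nor the pitch shifting that would produce the extra voices is shown. The hypothetical `harmony_voices` function snaps the sung note to the nearest note of a key and returns the frequencies a diatonic third and fifth above it. Equal temperament with A4 = 440 Hz and B natural minor (the key from the song catalog) are assumptions chosen for illustration.

```python
import math

NATURAL_MINOR_STEPS = [0, 2, 3, 5, 7, 8, 10]  # semitones above the tonic


def hz_to_midi(freq: float) -> float:
    """Fractional MIDI note number for a frequency (69 = A4 = 440 Hz)."""
    return 69 + 12 * math.log2(freq / 440.0)


def midi_to_hz(note: float) -> float:
    """Equal-tempered frequency of a (possibly fractional) MIDI note number."""
    return 440.0 * 2 ** ((note - 69) / 12)


def scale_notes(tonic_pc: int) -> list[int]:
    """Every MIDI note 0-127 in the natural minor scale on pitch class tonic_pc (C = 0)."""
    pitch_classes = {(tonic_pc + step) % 12 for step in NATURAL_MINOR_STEPS}
    return [n for n in range(128) if n % 12 in pitch_classes]


def harmony_voices(sung_hz: float, tonic_pc: int = 11, degrees=(2, 4)) -> list[float]:
    """Frequencies of extra voices a diatonic third and fifth above the sung note.

    tonic_pc=11 is B; degrees=(2, 4) means two and four scale steps up.
    """
    notes = scale_notes(tonic_pc)
    sung = hz_to_midi(sung_hz)
    # Snap the (possibly slightly out-of-tune) sung pitch to the nearest note in the key.
    i = min(range(len(notes)), key=lambda k: abs(notes[k] - sung))
    return [midi_to_hz(notes[i + d]) for d in degrees if i + d < len(notes)]


sung = 246.94  # roughly B3
voices = harmony_voices(sung)
ratios = [v / sung for v in voices]  # pitch-shift ratios a shifter would apply
print([round(v, 2) for v in voices], [round(r, 3) for r in ratios])
```

Singing B3 (about 246.94 Hz) yields D4 and F#4, which completes a B minor triad. Dividing each returned frequency by the sung frequency gives the ratio a pitch shifter would apply to the singer's own voice. This keeps the harmony voices in the singer's timbre, which suits the "one person sounds like many" idea.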

Design a visualization of sound: For this I imagine two possibilities. 1) In my collective play course we are using WebSockets. I see this as an awesome opportunity to have multiple clients contribute to and modify a collective outcome. I imagine each singer producing a colorful fuzzball, with the fuzzballs combining and mixing colors based on how the voices harmonize. 2) This could also serve as a teaching tool for singers, perhaps displaying the target pitch alongside the singer’s own pitch so they can learn to match it.
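Both visualization ideas depend on turning a sung frequency into something drawable. The sketch below assumes equal temperament with A4 = 440 Hz and an upstream pitch detector, which is not shown. It defines three functions:

- `pitch_hue` places the twelve pitch classes around the color wheel so that octaves share a color.
- `blend_hues` takes a circular mean so that several singers' fuzzballs can merge into one color.
- `cents_off` measures how sharp or flat a singer is against a target pitch, for the teaching tool.

All three names and the hue mapping are hypothetical. The sketch does not show how clients would share their pitches over WebSockets.

```python
import math


def hz_to_midi(freq: float) -> float:
    """Fractional MIDI note number, equal temperament with A4 = 440 Hz."""
    return 69 + 12 * math.log2(freq / 440.0)


def pitch_hue(freq: float) -> float:
    """Hue in degrees: 30 degrees per semitone, so every octave of a note
    gets the same colour (C = 0, A = 270)."""
    return (hz_to_midi(freq) % 12) * 30.0


def blend_hues(hues: list[float]) -> float:
    """Circular mean of several hues in degrees, for merging fuzzballs.
    Opposite hues (e.g. 0 and 180) have no meaningful average."""
    x = sum(math.cos(math.radians(h)) for h in hues)
    y = sum(math.sin(math.radians(h)) for h in hues)
    return math.degrees(math.atan2(y, x)) % 360


def cents_off(freq: float, target_hz: float) -> float:
    """Distance from the target pitch in cents (100 cents = 1 semitone).
    Positive means sharp, negative means flat."""
    return 1200 * math.log2(freq / target_hz)


# Two singers: A4 and the B below it.
hues = [pitch_hue(440.0), pitch_hue(246.94)]
print(hues, blend_hues(hues))
# A singer aiming for A4 but singing 445 Hz is about 20 cents sharp.
print(round(cents_off(445.0, 440.0), 1))
```

The circular mean is used because hue wraps around. Averaging 350° and 10° arithmetically gives 180° (cyan), while the circular mean gives 0° (red), which matches what a viewer would expect from mixing two nearly red fuzzballs.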

Create an installation that plays with the principles of acoustics: I’m not exactly sure what to do with this, and I’m not sure what it’s called, but some spaces are designed so that if you stand in one part of a room you can clearly hear what is happening in a completely different part of it.